By Evronia Azer, Carlos Ferreira and Maureen Meadows, Centre for Business in Society

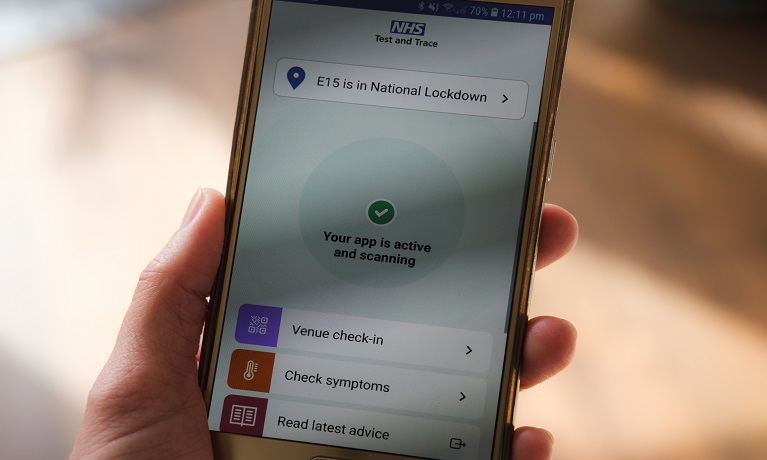

The NHS recently announced that its COVID contact tracing app will be discontinued on April 27, amid reports of falling use.

The app has been downloaded more than 31 million times since it was launched in 2020. But just over 100,000 of those downloads have taken place in 2023, perhaps an indicator of declining public interest as we learn to live with COVID.

The app’s closure offers an opportunity to assess where it succeeded and where it fell short of expectations. We may face public health challenges in the future that could benefit from similar technology, so it’s important to take lessons from this experience.

The app, launched in September 2020 after some delays, has been part of the NHS Test and Trace system, which was set up to alert people if they came into close contact with someone with COVID. Research has estimated that in England and Wales, in the app’s first year, it averted around one million COVID cases, corresponding to 44,000 hospitalisations and 9,600 deaths.

Research has also found that the percentage of people who tested positive after being identified as having been in close contact with a COVID case was roughly the same for the app as for the manual test and trace system.

This evidence suggests that the app did the job it was intended to do. However, it has faced a large amount of criticism since the outset.

What went wrong?

The app suffered from the weaknesses of any application depending on a single centralised system. If the system fell offline even briefly, it could cause major problems for users trying to board flights or enter venues.

Reports also claimed that the app didn’t detect iPhones well in its early days, and that it didn’t work properly on some other devices, for instance getting “stuck” on a loading screen.

As part of our research on the app, we conducted focus groups with users and non-users. We found many people were put off by apparent glitches in the app’s functioning. They talked about unclear or false notifications, such as alerts about possible exposures, which some found confusing or frightening.

Some users felt the app was rolled out too soon, without proper testing. They also felt there should have been wider and stronger communication to encourage uptake of the app, and explain its purpose, functions and benefits to the public.

Users’ expectations of the app were that it should be accurate and efficient, and not duplicate functionalities offered by other parts of the system. Some flagged that information provided by the app was typically available elsewhere, and were surprised that their vaccination records were held separately in another NHS app, rather than the COVID app.

The app operates without sharing users’ identities, thereby protecting their privacy. Yet concerns around the sharing of personal data were common.

The government sought to provide official reassurance that users of the app were not being watched. But trust had already been challenged by reports of classified documents pertaining to the app being inadvertently left unsecured online where the public could access them.

The app was also widely criticised for its frequent requests for users to self-isolate (the so-called “pingdemic”), which raised questions about the app’s effects on labour shortages. Certain participants in our research who couldn’t afford to miss work turned the app off to avoid notifications.

The importance of trust

For technical systems to succeed, they need to be trusted, and not resisted, by potential users. User trust is a broad issue, driven by a range of factors including the system’s accuracy, reliability, security, resilience, transparency, and alignment to users’ values and needs.

In other words, the technology needs to be judged as “legitimate” for the public to use it as intended. Legitimacy is achieved when a new technology is well understood, and judged to be a good fit with society. This will always be a challenge for contact tracing apps because of privacy and security concerns.

What’s more, political trust affects how people view technology deployed by governments. Political trust was shaky in the UK during the pandemic, and declining trust in government may have undermined the public’s attitudes to the app.

Our research shows that many users chose to adopt the app because they felt it was “the right thing to do” to help fight the spread of COVID and to protect themselves and those around them. From a more pragmatic perspective, the app allowed users to enter venues requiring check-in. But it didn’t always meet users’ expectations.

If a contact tracing app were to be deployed again, the government should bear in mind that public decision-making about technology goes well beyond the technical features of the system itself. The use of technology in public health can be valuable and ethical and can avoid wasted resources – but only if such systems are carefully and appropriately planned, designed, developed and tested.

The perception that the system doesn’t work properly or has been rolled out too soon, without proper testing, must be avoided. Effective communication should be adopted to encourage uptake, and the public should understand what any system does (and does not do), and why. Finally, privacy concerns must always be taken seriously.

Evronia Azer, Assistant Professor at the Centre for Business in Society, Coventry University; Carlos Ferreira, Assistant Professor, Research Centre for Business in Society, Coventry University, and Maureen Meadows, Professor of Strategic Management, Coventry University

This article is republished from The Conversation under a Creative Commons license. Read the original article.
